Containerisation: What and Why?

Containerisation is one of those technologies that - whilst I have frequently interacted with it and do build solutions using it - I have never quite grasped the theory of. That's not because I struggle to understand containers; I have just never truly deep-dived into it as a technology.

My understanding of containerisation has always been that containers are a lightweight virtualisation solution that can run anywhere regardless of the host operating system (OS). For the most part, that understanding has been perfectly fine and accurate enough for what I have needed to build. As I have started building more complex solutions requiring containers, I thought that perhaps I should do a deeper dive into containers.

The Problem

Traditionally, software has had to be tailored to the specific OS version running on its host, such as a PC or server. Windows packages have needed to be installed on the correct Windows version, and likewise for Linux. This can be quite a tedious process and can cause a whole load of issues, bugs, errors and the like.

Virtual machines (VMs) somewhat solved this, but developers still needed to care about the VM's OS. We will look into VMs in a moment.

Containers are abstracted away from the OS of the host. This allows containers to be portable and run across platforms; containers allow for software applications to be written once and run anywhere, thus reducing the time taken to create software for multiple platforms.

Virtualisation vs. Containerisation vs. Serverless

Virtualisation

Virtualisation is the process of hosting virtual computers - Virtual Machines (VMs) - on a host. VMs have their own OS bundled with them. If you want to have a Linux VM, you package Linux with it; if you want a macOS VM, you package macOS with it, etc. They allow multiple operating systems to run on a host at the same time, rather than requiring multiple servers. Each VM has its own file system and libraries bundled with it.

Virtualisation relies on a hypervisor. A hypervisor is a small software layer that enables VMs to run their own OS on the host computer. The hypervisor pools the computing power, storage and memory of the host and shares them out between the VMs. Hypervisors can run either directly on the hardware (a Type 1 hypervisor) or as an application on a host OS (a Type 2 hypervisor).

The key issue with virtualisation is also what is core to VMs: the need to bundle an OS with the VM. This is not necessarily a problem per se; in some instances you may want a separate OS on a host. If you are just using VMs to run software applications without the need for a separate OS, then VMs are more heavyweight than the other solution - containerisation.

Containerisation

Containers are smaller than VMs and require less start-up time. Why? Because they do not bundle their own operating system. Instead, containers rely on what is known as a container engine. You may have heard of some of these without realising: Docker and Podman are both container engines. Container engines interface with the host's OS, which removes the need for containers to bundle their own.

Containerisation creates a single executable piece of software that bundles all of an application's code and dependencies together. When running, these bundled applications become containers. These containers are created from images, which are templates of instructions that are read to create the container. The application code, config, libraries and dependencies that are required to run the application are all bundled into the executable package. A point I will labour is that they are not bundled with an OS of their own: a container never boots its own kernel.

Container engines utilise the host OS's kernel. If a compatible one does not exist on the host then a lightweight one will be provisioned to ensure that the containers can run. As an end-user you often do not need to directly interact with this kernel; you just need to be aware that it is provisioned for you. Docker, for example, will provision a lightweight Linux virtual machine when running Linux containers on Windows, and that virtual machine supplies the kernel; if running on Linux, it will interact directly with the host's Linux kernel.
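One rough way to observe this kernel sharing is to ask the OS which kernel a process can see. The sketch below is only an illustration, and the function name `kernel_info` is made up for this example. Run on a Linux host and then inside a container on that same host, it should report the same kernel release, because the container uses the host's kernel. Run inside a Linux container on Windows, it should report a Linux kernel rather than a Windows one.

```python
import platform


def kernel_info() -> dict[str, str]:
    """Return the kernel name and release visible to this process."""
    return {
        "system": platform.system(),    # e.g. "Linux", "Windows" or "Darwin"
        "release": platform.release(),  # the kernel release string
    }


if __name__ == "__main__":
    info = kernel_info()
    print(f"{info['system']} kernel {info['release']}")
```

A VM would behave differently here: a process inside a VM sees the guest OS's kernel, which is independent of whatever the host is running.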

As containers do not bundle their own OS - relying on the container engine to interface with the host OS - they are more lightweight than VMs. They are smaller than VMs - which allows for more containers on a host for the same resource cost - and have quicker start-up times than VMs.

Containers running on the same container engine can also share common binaries and libraries, which eliminates some overhead.

The Open Container Initiative (OCI) is an initiative for creating and promoting common, minimal and open industry standards relating to the formats and runtimes of containers. It was established in 2015 to allow for broader choices for open-source container engines and to allow users to avoid vendor lock-in.

Serverless

You may be thinking, that's great but what about serverless? That is also lightweight.

Serverless is where the cloud provider fully manages the infrastructure and allows developers to run applications without having to worry about the provisioning of servers and scaling of resources. Whilst it allows for somewhat easier deployment of applications, serverless does take some control of the underlying infrastructure away from developers.

Containers focus on portability, but still give developers control over the environment the application runs in, without having to think about whether libraries and dependencies interface well with the host OS. Cloud providers do also allow for the hosting of containers.

Architecture

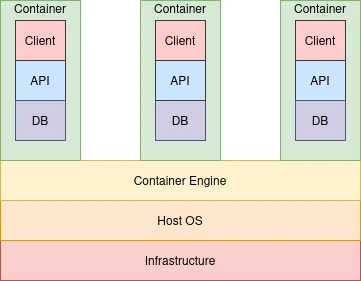

Container architecture has four layers.

The first layer is the infrastructure. This is the IT infrastructure, i.e. the host computer. This layer is the bare-metal server or physical computer on top of which sits the remaining architecture. The host runs an operating system on top of which sits the container engine.

The container engine, also known as a run-time engine, runs on top of the host OS and virtualises the OS's resources, which are then allocated to the containers. It acts as the intermediary between the container images and the host's OS. Container engines also manage the application's requirements in terms of resources.

On top of the container engine sit the containers - the applications and their dependencies. The container engine creates the containers from the images, and the software application runs inside them.

Images, Layers, Repositories and Registries

As discussed, a container image is a template to create containers. Images are stored in repositories - which are collections of images that have the same name but different tags. A tag is used to denote the version of an image. A container registry is a collection of different repositories. When a container image is pushed to a registry, it is associated with a manifest. A manifest uniquely identifies an image using a SHA-256 hash, which is calculated from the artifacts and layers associated with the image.
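To make the registry, repository and tag relationship concrete, here is a small sketch that splits an image reference into those three parts. It is a simplification under stated assumptions: the `parse_image_ref` function, the `ImageRef` type and the `registry.example.com` host are all made up for illustration, digest references (the `@sha256:...` form) are not handled, and falling back to a `latest` tag mirrors a common convention rather than a rule.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str    # the host that stores a collection of repositories
    repository: str  # a collection of images sharing one name
    tag: str         # the label for one version within the repository


def parse_image_ref(ref: str, default_registry: str = "registry.example.com") -> ImageRef:
    """Split 'registry/repository:tag' into its parts (simplified)."""
    first, slash, rest = ref.partition("/")
    # Treat the first path component as a registry host only if it looks like one.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = default_registry, ref
    # The tag, if present, follows the last colon of what remains.
    repository, colon, tag = remainder.rpartition(":")
    if not colon:
        repository, tag = remainder, "latest"  # assumed default tag
    return ImageRef(registry, repository, tag)


if __name__ == "__main__":
    print(parse_image_ref("registry.example.com/shop/api:1.4.2"))
    print(parse_image_ref("shop/api"))
```

Two references that differ only in their tag point at the same repository, which is what "same name but different tags" means in practice.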

A layer is part of an image. When an image is modified, a layer is added to the image. A layer represents a file system modification. Layers can be reused and shared between images, which leads to faster builds and reduces the amount of storage required to distribute a container image. It also adds a security risk: if there is a security issue with a layer, it will be shared between all containers that utilise that layer. If any metadata or layers change within an image, then the manifest hash will change.
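The relationship between layers and the manifest hash can be sketched with plain SHA-256 hashing. This is a toy model, not the real format: actual manifests carry more fields (such as media types and sizes), and the helper names and byte strings below are invented for illustration. It shows two things: a layer shared by two images has the same digest in both, and changing any layer changes the manifest hash.

```python
import hashlib
import json


def digest(data: bytes) -> str:
    """Content digest in the familiar 'sha256:<hex>' form."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def build_manifest(layers: list[bytes]) -> dict:
    """Toy manifest: just an ordered list of layer digests."""
    return {"layers": [digest(layer) for layer in layers]}


def manifest_digest(manifest: dict) -> str:
    """Hash a stable JSON encoding of the toy manifest."""
    encoded = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return digest(encoded)


# Hypothetical layer contents standing in for file system changes.
base_layer = b"base file system"
app_v1 = b"application files, version 1"
app_v2 = b"application files, version 2"

image_v1 = build_manifest([base_layer, app_v1])
image_v2 = build_manifest([base_layer, app_v2])

# The shared base layer has the same digest in both images, so it can be reused.
assert image_v1["layers"][0] == image_v2["layers"][0]
# One changed layer is enough to change the manifest hash.
assert manifest_digest(image_v1) != manifest_digest(image_v2)
```

The same property explains the security trade-off: because the base layer is identical in both images, a flaw in it is present in both.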

Repositories are used for the management of images; registries store collections of repositories.

Composition

When building systems that rely on multiple containers, it can get quite tedious to have to pull the images one-by-one and restart those containers individually. You have to ensure that all the containers that depend on each other are started. This can be tedious even in the case of a small full-stack application.

Container composition solves this problem. It allows developers to specify the details of multiple containers - such as container names, which ports are used and more - and to define which containers are required for an application to run. This can be done in a single file, such as a docker-compose.yml file.

Once the composition is defined, we can start every container with one command and one file. For example, rather than having to start a client, back-end and database individually, we could just run the compose file, which will handle the starting of each container. The same applies to other steps we might want to run against the containers, such as stopping them. Each container that is run via composition runs on a single host; composition handles the networking and connecting of containers on that single host.
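The idea of starting dependent containers in the right order can be sketched as a dependency graph. This is only an illustration of the concept and not how any particular compose tool is implemented; the service names and the `start_order` and `stop_order` helpers are hypothetical. Each service lists the services it depends on, and a topological sort yields an order in which every dependency starts before the service that needs it.

```python
from graphlib import TopologicalSorter

# Hypothetical full-stack application: each service maps to what it depends on.
services = {
    "database": [],
    "backend": ["database"],
    "client": ["backend"],
}


def start_order(deps: dict[str, list[str]]) -> list[str]:
    """Order services so that dependencies come before their dependants."""
    return list(TopologicalSorter(deps).static_order())


def stop_order(deps: dict[str, list[str]]) -> list[str]:
    """Stop in reverse, so nothing loses a dependency while still running."""
    return list(reversed(start_order(deps)))


if __name__ == "__main__":
    print("start:", start_order(services))
    print("stop: ", stop_order(services))
```

For this chain of three services the start order is database, then backend, then client, which is exactly the bookkeeping that becomes tedious to do by hand.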

Orchestration

Orchestration refers to the running of clusters of containers across multiple machines and environments, whereas composition refers to the running of multiple containers on a single host.

A popular tool for performing orchestration for containers is Kubernetes (also known as K8s). Cloud providers also provide orchestration tools, often utilising K8s.

With orchestration, each cluster consists of a group of nodes. Each cluster contains worker nodes, which run containers on the container engine. A control plane of nodes acts as the orchestrator of the cluster it is attached to.

Orchestration follows a three-step process:

- Provisioning: This is where the orchestration tool reads a config file which defines the container images and where they are located. The containers are then provisioned with specific versions of the dependencies. The network connections between containers are also created at this point.

- Deployment: Containers are deployed to their host. In K8s, containers are deployed in Pods - a group of one or more containers with shared storage and network resources.

- Lifecycle management: Scaling, load balancing and resource allocation are managed in order to ensure the availability and good performance of the clusters. In the event of an outage, hosts may be changed. Telemetry information is collected to monitor the health and performance of the application that runs on the cluster. Orchestration tools can automatically start, stop, and manage containers.
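The lifecycle management step can be pictured as a loop that compares the desired state with what is actually running and works out what to do about the difference. The sketch below is a heavily simplified model of that idea, assuming a single service with a target replica count; the `Container` type, the `reconcile` function and the action strings are invented for illustration and do not correspond to any orchestrator's API.

```python
from dataclasses import dataclass


@dataclass
class Container:
    name: str
    healthy: bool


def reconcile(desired_replicas: int, running: list[Container]) -> list[str]:
    """Return the actions needed to move from the actual to the desired state."""
    actions: list[str] = []
    healthy: list[Container] = []
    for container in running:
        if container.healthy:
            healthy.append(container)
        else:
            # Unhealthy containers are stopped; replacements are counted below.
            actions.append(f"stop {container.name}")
    shortfall = desired_replicas - len(healthy)
    if shortfall > 0:
        actions.extend(["start replica"] * shortfall)
    elif shortfall < 0:
        # Too many healthy containers: stop the surplus.
        for container in healthy[desired_replicas:]:
            actions.append(f"stop {container.name}")
    return actions


if __name__ == "__main__":
    current = [Container("web-1", healthy=True), Container("web-2", healthy=False)]
    print(reconcile(3, current))
```

With three replicas desired and only one healthy container, the result is one stop and two starts. A real orchestrator repeats this kind of comparison continuously, which is how it can recover from outages without anyone stepping in.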

Orchestration allows for more resilient applications, cost-efficiency and, perhaps most importantly, the automation of the provisioning and scaling of resources which - in addition to the aforementioned benefits - greatly reduces the manual effort and complexity of managing a large number of containers. For large-scale applications, it would be incredibly difficult to manage all of the required containers manually and also scale them precisely without human error - such as under-provisioning containers or over-utilising a host's resources.

Use Cases

Containerisation has many use-cases. A few include:

- Cloud Migrations: Organisations can encapsulate legacy applications into containers. These applications can then be deployed to a cloud environment. Organisations can then break out their applications into smaller pieces which can be hosted on containers and modernised piecemeal, rather than having to rewrite the whole software in one go.

- Microservices: Containerisation lends itself well to microservices, as the concepts are similar in that they transform applications from one large-scale monolith into a collection of smaller, portable, scalable and efficient systems. Containers provide a lightweight encapsulation that can help with this.

Of course, these are just some of the use cases. Others do exist, such as creating quick environments for testing or even just having the benefit of hosting a full-stack application on composed containers.

Pros vs. Cons

As with most things, containerisation has many pros and cons. Often, the trade-offs are entangled together.

- Portability: Portability really is the main point of containerisation and is the concept's big pro. As containers are abstracted away from the host OS, they are able to run uniformly and consistently across any platform without the need for re-writing.

- Agility: The portability aspect also allows for more agile development, as developers can focus on the application code without having to worry as much about the host OS and hardware.

- Efficiency: As mentioned previously, containers can share common binaries and libraries, making applications running on containers more efficient via resource optimisation. The lack of an OS being bundled with containers also makes them more resource efficient.

- Scalability: As mentioned in the orchestration section, auto-scaling can be implemented for containers. Their low resource cost also allows multiple containers to run on the same host, and their short start-up times mean that scaling up takes less time.

- Ease of management: Provisioning, deployment and management of containers can be automated, at the cost of having to set up the orchestration and composition.

- Fault isolation: By separating out containers, the failure of one container does not cause all other containers to cease running.

- Security: The isolation of applications into containers reduces the chance that malicious code from one container will impact other containers and invade the host operating system. When dependencies are shared, however, this risk is increased.

- Atomisation: With containers, complex applications can be broken down into a series of smaller, specialised and manageable services. For legacy systems this can be harder and deliberate design choices must be made to allow for this atomisation.

Conclusion

Containerisation really is a useful piece of technology. Whilst I had some understanding of the core concepts, I felt the need to do a deeper dive into it; I've shared my notes on this with you, and I hope you find it useful if you are looking to understand containers more yourself or if you are just getting started.

At a high level, containers are the running instances of bundled applications created from container images. They run on a container engine which interfaces with a host OS to ensure that the containers can run anywhere. Containerisation allows write-once, run-anywhere software solutions.

I myself have found containerisation particularly useful when working on small side-projects. With containerisation I need to worry less about where my applications will be hosted; with composition I can spin up a full-stack application in moments. Obviously, small side-projects pale in comparison to industrial projects - but the pros still remain even in more complex projects.