Test-Driven Development: Red, Green, Refactor!

Introduction

When I was first taught about test-driven development (TDD), I was taught it wrong. I believe - with only anecdotal evidence - that a large proportion of developers have also been taught TDD incorrectly.

I was first taught TDD when I was at university; I was taught it a second time when I first entered the technology industry. Both times, TDD was taught as something like this:

'Before writing any new code for functionality, first write a test for what you want to implement. Maybe write a few tests, depending on what you want to implement. Once you've written your tests, you can move on to programming the functionality. You will know when you are done, because all of the tests will pass! Once your tests pass, move on to the next bit of functionality by repeating the same steps!'

It never sat right with me; it came across as busywork. That is because the above is not really what TDD is. After studying TDD further, I found some useful information that confirmed my suspicions. In this post I hope to outline what I found and show you what TDD is meant to be.

One thing I will quickly state is that I am not particularly dogmatic about TDD. I like to use it where it is appropriate and where I can, not all of the time, but I do think it helps me and others to be better engineers.

The Rules of TDD

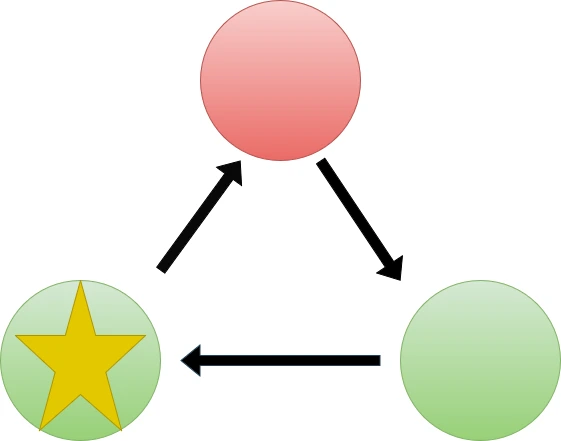

TDD follows a cycle. The step most often forgotten is usually the most important one: the final step.

A TDD cycle begins before you write any functional code, and it goes like this:

- Write a test for what you want to implement. It will not pass; it might not even compile, and that is fine!

- Make the test pass in the quickest way possible. Do not worry about making the code clean and well engineered. Have fun, commit coding sins and just get the code running as fast as possible!

- All those sins you committed? I am sorry to say that now you need to fix them. You need to refactor them out.

This is known as the red, green, refactor pattern. Red is when you write a test and it fails; green is getting the test to pass as quickly as possible; refactor is tidying up all of the functional code you have just written.
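To make the cycle concrete, here is a minimal sketch compressed into one file. The function formatPrice, the pence-to-pounds example and the passesAll helper are hypothetical and exist only for illustration. In a real project the test would live in a Jest or Vitest file, and each case would usually get its own trip around the cycle.

```typescript
// Red: write the test first. formatPrice is a hypothetical function that turns
// a whole number of pence into a display string such as "£1.50".
const priceCases: Array<[number, string]> = [
  [150, "£1.50"],
  [5, "£0.05"],
  [1000, "£10.00"],
];

// Returns true only if every case passes for the given implementation.
function passesAll(formatPrice: (pence: number) => string): boolean {
  return priceCases.every(([pence, expected]) => formatPrice(pence) === expected);
}

// Green: the quickest thing that works. Sins included: string surgery
// instead of arithmetic, and padding with a mutable loop.
function formatPriceGreen(pence: number): string {
  let digits = String(pence);
  while (digits.length < 3) digits = "0" + digits;
  return "£" + digits.slice(0, digits.length - 2) + "." + digits.slice(digits.length - 2);
}

// Refactor: same behaviour, clearer intent. The tests above must still pass.
function formatPrice(pence: number): string {
  const pounds = Math.floor(pence / 100);
  const remainder = pence % 100;
  const paddedPence = remainder < 10 ? "0" + remainder : String(remainder);
  return "£" + pounds + "." + paddedPence;
}

console.log(passesAll(formatPriceGreen), passesAll(formatPrice)); // true true
```

Both versions pass the same tests. Only the refactored one is something you would want to maintain. The sketch assumes non-negative whole numbers of pence.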

I understand why the refactor step often gets skipped. You might be thinking that the test passes, so the code is good enough. That might be true in the short term, but you want nice, maintainable code, and code written to hit acceptance criteria as quickly as possible is rarely maintainable.

Each of the steps in the TDD cycle serves a purpose.

The red step makes you think about the contracts of your modules, classes and functions. As you write the tests, you are thinking about how your new code will be consumed.

The green step is about getting your code working and your tests passing, so that you have a safety net for the next step.

The refactoring step is all about making your code well engineered. You tidy your code to make it nice and clean without changing its behaviour or introducing breaking changes. How will you know if you introduce a breaking change? The tests you wrote in the red step will fail! This quick feedback loop lets us experiment with designs and implementations while refactoring, but only once the green step is complete.
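Here is a rough sketch of that safety net. It uses a hypothetical countWords function and a hypothetical failures helper that stands in for a test runner. The first refactor looks tidier but changes behaviour, and the tests written in the red step catch it straight away. The second refactor keeps the behaviour intact.

```typescript
// Tests written in the red step for a hypothetical countWords function.
// They only check inputs against outputs.
const wordCases: Array<[string, number]> = [
  ["", 0],
  ["hello", 1],
  ["hello world", 2],
  ["  hello   world  ", 2],
];

// Runs every case against an implementation and describes any that fail.
function failures(countWords: (text: string) => number): string[] {
  const failed: string[] = [];
  for (const [input, expected] of wordCases) {
    const actual = countWords(input);
    if (actual !== expected) {
      failed.push(JSON.stringify(input) + ": expected " + expected + ", got " + actual);
    }
  }
  return failed;
}

// Green: a character-by-character loop counting runs of non-space characters.
function countWordsGreen(text: string): number {
  let count = 0;
  let inWord = false;
  for (let i = 0; i < text.length; i++) {
    const isSpace = text.charAt(i) === " ";
    if (!isSpace && !inWord) count++;
    inWord = !isSpace;
  }
  return count;
}

// A tempting refactor that quietly changes behaviour.
const countWordsBroken = (text: string): number => text.split(" ").length;

// A refactor that keeps behaviour: drop the empty strings left by extra spaces.
const countWordsRefactored = (text: string): number =>
  text.split(" ").filter((word) => word !== "").length;

console.log(failures(countWordsBroken)); // two failures: "" and the padded string
console.log(failures(countWordsRefactored).length); // 0
```

countWordsRefactored counts runs of non-space characters exactly as the loop does. That makes it a genuine refactor rather than a change in behaviour.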

As soon as a test fails, you are back at red: get that test passing again as quickly as possible before carrying on.

By following these steps you will have well-engineered code that hits the acceptance criteria outlined in the tests.

Why?

You may be sceptical that going through these cycles is worth it. Why write tests before programming functionality? There are multiple reasons, but here are a few.

I find that if I write tests after I write functionality then subconsciously I am just writing tests to confirm what the code already does. I am not really testing my code against given requirements.

Additionally - and I am sure we've all been at this point - when we hit a stressful and busy period, suddenly testing becomes an afterthought and is not really done as much, if at all. TDD ensures we are testing as we go.

Finally, I find that writing my tests first really does make me consider my code design more. I think more about what parameters I will need and how the result of my code will be consumed. I think about these things anyway, but taking a dedicated moment to write my tests, and to think only about those things, noticeably improves my code.

By writing tests first, we are less stressed, we build functionality with confidence, and our code is more likely to be easy to maintain.

An important thing to note with TDD is that you should not test implementation details. You want to test the behaviour of your code: the outputs it produces for given inputs. The refactoring step reinforces this, because refactoring changes the implementation but should not break your tests. If tests rely on implementation details, they will be extremely fragile.
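As an illustration, this sketch swaps an array-backed stack for a linked-list one, which is the kind of change a refactor might make. All the names in it are hypothetical. The behaviour test only uses push, pop and size, so it passes for both versions. The implementation-detail test reads the private items array, so it fails once that array is gone. TypeScript's private modifier only exists at compile time, which is why the cast can reach the field at runtime at all.

```typescript
// The public contract a hypothetical stack offers its callers.
interface Stack<T> {
  push(item: T): void;
  pop(): T | undefined;
  size(): number;
}

// First implementation: backed by an array.
class ArrayStack<T> implements Stack<T> {
  private items: T[] = [];
  push(item: T): void { this.items.push(item); }
  pop(): T | undefined { return this.items.pop(); }
  size(): number { return this.items.length; }
}

// Refactored implementation: a linked list. Same contract, different internals.
type StackNode<T> = { value: T; next: StackNode<T> | undefined };

class LinkedStack<T> implements Stack<T> {
  private head: StackNode<T> | undefined = undefined;
  private count = 0;
  push(item: T): void {
    this.head = { value: item, next: this.head };
    this.count++;
  }
  pop(): T | undefined {
    if (this.head === undefined) return undefined;
    const value = this.head.value;
    this.head = this.head.next;
    this.count--;
    return value;
  }
  size(): number { return this.count; }
}

// Behaviour test: inputs against outputs, through the public contract only.
function behaviourTest(makeStack: () => Stack<number>): boolean {
  const stack = makeStack();
  stack.push(1);
  stack.push(2);
  return stack.pop() === 2 && stack.pop() === 1 && stack.pop() === undefined && stack.size() === 0;
}

// Implementation-detail test: reaches into the private `items` array,
// so it only works for as long as that array exists.
function implementationDetailTest(makeStack: () => Stack<number>): boolean {
  const stack = makeStack();
  stack.push(1);
  stack.push(2);
  const items = (stack as unknown as { items?: number[] }).items;
  return items !== undefined && items.length === 2 && items[1] === 2;
}

console.log(behaviourTest(() => new ArrayStack<number>())); // true
console.log(behaviourTest(() => new LinkedStack<number>())); // true
console.log(implementationDetailTest(() => new ArrayStack<number>())); // true
console.log(implementationDetailTest(() => new LinkedStack<number>())); // false
```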

Mocking is often used in TDD. The rationale is that we want tests to be quick and we want to ensure that tests do not affect each other. Mocks, test fixtures and other test doubles can quickly get the system under test into the state we want, so the tests run quickly and in isolation. We can set up a mock database, for example, and tear it down just as quickly. This means we could, if we wanted to, create a new mock database for each test that needs one, so that no test affects another.
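Here is a minimal sketch of that idea. It assumes a hypothetical UserStore interface and a hypothetical registerUser function. Instead of using a real database, each test creates its own in-memory fake (one kind of test double), so nothing leaks from one test to the next. Jest's jest.fn() and Vitest's vi.fn() can create mock functions for the same purpose; the hand-written fake just keeps the example free of dependencies.

```typescript
// The storage contract the code under test depends on (hypothetical).
interface UserStore {
  has(email: string): boolean;
  add(email: string): void;
}

// An in-memory fake standing in for a real database. Cheap to create.
class InMemoryUserStore implements UserStore {
  private emails: string[] = [];
  has(email: string): boolean { return this.emails.indexOf(email) !== -1; }
  add(email: string): void { this.emails.push(email); }
}

// The code under test: registers an email unless it is already taken.
function registerUser(store: UserStore, email: string): "registered" | "duplicate" {
  if (store.has(email)) return "duplicate";
  store.add(email);
  return "registered";
}

// Each test builds its own store, so no state leaks between tests
// and they pass in any order.
function registersNewUser(): boolean {
  const store = new InMemoryUserStore();
  return registerUser(store, "ada@example.com") === "registered" && store.has("ada@example.com");
}

function rejectsDuplicateEmail(): boolean {
  const store = new InMemoryUserStore();
  registerUser(store, "ada@example.com");
  return registerUser(store, "ada@example.com") === "duplicate";
}

// Run in both orders to show the tests are independent of each other.
console.log(registersNewUser() && rejectsDuplicateEmail()); // true
console.log(rejectsDuplicateEmail() && registersNewUser()); // true
```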

Moving Forwards

It can be difficult to know how to move forwards with TDD. Which tests should you write next?

It is useful to maintain a list of tests you want to write. This could be a file on your computer, or it can live in the code itself. Jest and Vitest provide it.todo('A test to implement'), which skips over the test and marks it as yet to be implemented; other testing tools have similar functionality. I often use these todos as my list of tests to implement as part of the TDD process.

Once one test is passing and the code is clean, it is time to think about the next test. Which test should you pick? When following TDD, pick a test that is easy to implement and that brings you closer to your goal. You want to keep moving forward and gaining confidence, and the easiest test to implement will nudge you in that direction.
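As an illustration, here is one possible ordering of tests for the well-known Roman numerals kata, using a hypothetical toRoman function. Each case is a small step beyond the last. The implementation shown is just where the cycles might end up; it is not the only answer.

```typescript
// Tests in the order they might be picked: each is a small step from the last.
// toRoman is a hypothetical function used only for illustration.
const romanCases: Array<[number, string]> = [
  [1, "I"], // simplest possible case
  [2, "II"], // repetition
  [3, "III"],
  [5, "V"], // a second symbol
  [4, "IV"], // subtraction
  [9, "IX"],
  [10, "X"],
  [1994, "MCMXCIV"], // everything at once, picked last
];

// Where the implementation might end up after the cycles: a table of values,
// largest first, including the subtractive pairs.
const numerals: Array<[number, string]> = [
  [1000, "M"], [900, "CM"], [500, "D"], [400, "CD"],
  [100, "C"], [90, "XC"], [50, "L"], [40, "XL"],
  [10, "X"], [9, "IX"], [5, "V"], [4, "IV"], [1, "I"],
];

function toRoman(value: number): string {
  let remaining = value;
  let result = "";
  for (const [amount, symbol] of numerals) {
    // Use the largest symbol as many times as it fits, then move down the table.
    while (remaining >= amount) {
      result += symbol;
      remaining -= amount;
    }
  }
  return result;
}

const failing = romanCases.filter(([n, expected]) => toRoman(n) !== expected);
console.log(failing.length); // 0
console.log(toRoman(1994)); // MCMXCIV
```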

At times, you may find that tests become too large to get working quickly. At this point you should split the large test into multiple smaller tests that are easy to read. We want the smallest test that brings us closer to our goal of clean code that hits the requirements. If a test becomes complicated, it probably needs breaking down.

TDD cycles might start out slowly, but they speed up as you become more familiar with TDD and its techniques. As your test suites grow, you may find redundant tests that cover the same thing in multiple places. Remove them if they do not give you any additional confidence that the system under test works correctly.

Caveats

TDD can lead to a lot of test code; you will often have at least as much test code as functional code. That is more code that needs maintaining. That is fine, though: your tests should be small, simple and easy to maintain. Each test should give you confidence that your contracts are well designed and that your code works.

You also do not want to be practising TDD on other systems' code. You might, however, want to write some "learning tests": tests you use to learn about a package and confirm your understanding of it. Those are fine while learning, but they should be deleted afterwards. If you have written a wrapper around a package, then you will want to test that wrapper, not the package itself.
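Here is a small sketch of the difference. It uses JavaScript's built-in Array.prototype.sort as the "package" and a hypothetical sortNumbers wrapper. The learning test confirms something that surprises many people: sort with no comparator compares values as strings. Once that behaviour is understood, the learning test would be deleted. The wrapper's tests are the ones worth keeping.

```typescript
// Learning test: confirms our understanding of the built-in sort.
// With no comparator, elements are compared as strings, so 10 sorts before 9.
// Useful while learning, then deleted.
function learningTestDefaultSortIsLexicographic(): boolean {
  const sorted = [10, 9, 1].sort();
  return sorted[0] === 1 && sorted[1] === 10 && sorted[2] === 9;
}

// Our own wrapper (hypothetical): this is the code we keep tests for.
function sortNumbers(values: readonly number[]): number[] {
  // Copy first so the caller's array is left untouched, then sort numerically.
  return values.slice().sort((a, b) => a - b);
}

// Tests for the wrapper's contract, not for the built-in sort itself.
function sortsNumerically(): boolean {
  return sortNumbers([10, 9, 1]).join(",") === "1,9,10";
}

function doesNotMutateInput(): boolean {
  const input = [3, 1, 2];
  sortNumbers(input);
  return input.join(",") === "3,1,2";
}

console.log(learningTestDefaultSortIsLexicographic()); // true
console.log(sortsNumerically() && doesNotMutateInput()); // true
```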

Conclusion

I hope this post has given you more confidence with TDD, and that you can now see it is about more than just 'writing a test before writing functional code'.

I find TDD a powerful tool when writing a new part of a program. When working in an older part of a program that was not developed via TDD, I find it useful to first write the tests I wish were there. Once those tests are in place, I can move forward in a TDD style on new functionality.

A fair bit of my knowledge on this has come from Kent Beck's book Test-Driven Development: By Example and Ian Cooper's talk TDD, Where Did It All Go Wrong. I highly recommend seeking them out.

I really do enjoy using TDD where I can, and I find it makes me a better engineer. Give it a try and see how it feels, and whether it improves your craft.

The final words on this really ought to be a repeat of the cycle of TDD:

- Red: Write a test. It won't pass.

- Green: Get that test passing as quickly as possible.

- Refactor: Make the code that made the test pass well engineered, nice and clean.